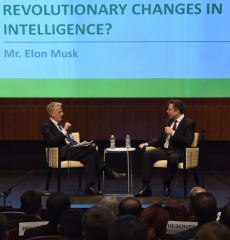

Two years ago, the AFCEA Intelligence Committee (I’m a member) invited Elon Musk for a special off-the-record session at our annual classified Spring Intelligence Symposium. The Committee assigned me the task of conducting a wide-ranging on-stage conversation with him, going through a variety of topics, but we spent much of our time on artificial intelligence (AI) – and particularly artificial general intelligence (AGI, or “superintelligence”).

I mention that the session was off-the-record. In my own post back in 2015 about the session, I didn’t characterize Elon’s side of the conversation or his answers to my questions – but for flavor I did include the text of one particular question on AI which I posed to him. I thought it was the most important question I asked…

(Our audience that day: the 600 attendees included a top-heavy representation of the Intelligence Community’s leadership, its foremost scientists and technologists, and executives from the nation’s defense and national-security private-sector partners.)

Here’s that one particular AI question I asked, quoted from my blogpost of 7/28/2015:

“AI thinkers like Vernor Vinge talk about the likelihood of a “Soft takeoff” of superhuman intelligence, when we might not even notice and would simply be adapting along; vs a Hard takeoff, which would be a much more dramatic explosion – akin to the introduction of Humans into the animal kingdom. Arguably, watching for indicators of that type of takeoff (soft or especially hard) should be in the job-jar of the Intelligence Community. Your thoughts?”

Months after that AFCEA session, in December 2015 Elon worked with Greg Brockman, Sam Altman, Peter Thiel and several others to establish and fund OpenAI, “a non-profit AI research company, discovering and enacting the path to safe artificial general intelligence (AGI).” OpenAI says it has a full-time staff of 60 researchers and engineers, working “to build safe AGI, and ensure AGI’s benefits are as widely and evenly distributed as possible.”

Fast-forward to today. Over the weekend I was reading through a variety of AI research and sources, keeping current in general for some of my ongoing consulting work for Deloitte’s Mission Analytics group. I noticed something interesting on the OpenAI website, specifically on a page it posted several months ago labelled “Special Projects.”

There are four such projects listed, described as “problems which are not just interesting, but whose solutions matter.” Interested researchers are invited to apply for a position at OpenAI to work on the problem – and they’re all interesting, and could lead to consequential work.

But the first Special Project problem caught my eye, because of my question to Musk the year before:

- “Detect if someone is using a covert breakthrough AI system in the world. As the number of organizations and resources allocated to AI research increases, the probability increases that an organization will make an undisclosed AI breakthrough and use the system for potentially malicious ends. It seems important to detect this. We can imagine a lot of ways to do this – looking at the news, financial markets, online games, etc.”

That reads to me like a classic “Indications & Warning” problem statement from the “other” non-AI world of intelligence.

I&W (in the parlance of the business) is a process used by defense intelligence and the IC to detect indicators of potential threats while sufficient time still exists to counter those efforts. The doctrine of seeking advantage through warning is as old as the art of war; Sun Tzu called it “foreknowledge.” There are many I&W examples from the Cold War, from the overall analytic challenge (see a classic thesis “Anticipating Surprise”), and from specific domain challenges (see for example this 1978 CIA study, Top Secret but since declassified, on “Indications and Warning of Soviet Intentions to Use Chemical Weapons during a NATO-Warsaw Pact War”).

The I&W concept has subsequently been transferred to new domains of intelligence like Space/Counter-Space (see the 2013 DoD “Joint Publication on Space Operations Doctrine,” which describes the “unique characteristics” of the space environment for conducting I&W, whether from orbit or in other forms), and of course since 9/11 the I&W approach has been applied intensely in counter-terrorist realms in defense and homeland security.

It’s obvious Elon Musk and his OpenAI cohort believe that superintelligence is a problem worth watching. Elon’s newest company, the brain-machine-interface startup Neuralink, sets its core motivation as avoiding a future in which AGI outpaces simple human intelligence. So I’m staying abreast of indications of AGI progress.

For the AGI domain I am tracking many sources through citations and published research (see OpenAI’s interesting list here), and watching for any mention of I&W monitoring attempts or results by others which meet the challenge of what OpenAI cites as solving “Problem #1.” So far, nothing of note.

But I’ll keep a look out, so to speak.

Lewis, I could not add a comment from my system….

I&W is not well understood in social norms or advanced technology. I believe there needs to be more engagement between the science & technology // R&D shops and the people that work on warning issues. This applies both to Government and to what is actually happening in many policy and investment parts of industry. I would like to talk with you about Problem #1.

See you tomorrow at the symposium,

George


George – Let’s talk about what “Solution #1” might look like…. Cheers my friend – lewis

Lewis:

Remember our discussion a year ago when I was describing a commercially available AI capability that would identify a Snowden before they acted, mass shooters, etc.?

Exact same premise but not someone using maliciously… though if acquired by an adversary… could well be used that way…

Rich – Yes, you’re right, very same premise. You’re ahead of the curve as always. And malicious actors? Way too many already.

Lewis:

Very interesting article (as always). What we can “know” using AI on the open source and available commercial data is astounding and not well understood by most of the community as we cling to historical LSIs and methods. Hopefully we will recognize the value of using research efforts like OpenAI to develop both better understanding and to be aware of the pitfalls of considering AGI the golden solution for the future.

Wise approach, Rich – I agree.

Lewis, I am impressed. Eternal truths, or at least those that endure the test of time, are valuable and rare. I read this post and contemplated this:

Indications & Warnings remains fresh and relevant during the Wuhan virus pandemic, three years later. Sun Tzu might tip his hat to you.
